Docker Container Networking Modes

Date: 25th December 2023

This may sound prosaic, but how do Docker containers communicate with each other?

“Docker container networking” refers to how containers communicate with each other and with non-Docker services. A container has no information about what kind of network it is attached to, or whether its peers are also Docker workloads. A container only sees a network interface with an IP address, a gateway, a routing table, DNS services, and other networking details.

Unless, of course, it is explicitly cut off from the network. This is done by setting the network driver to “none”, covered later in this article.
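To make the “a container only sees an interface, a gateway and DNS” point concrete, here is a minimal Python sketch you could run inside a Linux container. It prints the default gateway and DNS servers that the container sees. It assumes the standard Linux files /proc/net/route and /etc/resolv.conf are present. The function names are hypothetical helpers, not part of any Docker tooling. On the default bridge network the reported gateway would typically be 172.17.0.1 (docker0). In a “none” container the routing table has no default entry, so `default_gateway` returns `None`.

```python
import socket
import struct
from typing import List, Optional


def default_gateway(route_table: str) -> Optional[str]:
    """Return the default gateway listed in /proc/net/route text, or None."""
    for line in route_table.splitlines()[1:]:  # first line is the header
        fields = line.split()
        # Columns are: Iface, Destination, Gateway, Flags, ...
        if len(fields) >= 3 and fields[1] == "00000000":  # destination 0.0.0.0
            # The kernel prints IPv4 addresses as little-endian hex.
            return socket.inet_ntoa(struct.pack("<L", int(fields[2], 16)))
    return None


def nameservers(resolv_conf: str) -> List[str]:
    """Return the DNS servers listed in /etc/resolv.conf text."""
    servers = []
    for line in resolv_conf.splitlines():
        parts = line.split()
        if len(parts) >= 2 and parts[0] == "nameserver":
            servers.append(parts[1])
    return servers


if __name__ == "__main__":
    try:
        with open("/proc/net/route") as f:
            print("default gateway:", default_gateway(f.read()))
        with open("/etc/resolv.conf") as f:
            print("DNS servers:", nameservers(f.read()))
    except OSError as exc:  # e.g. not running on Linux
        print("could not read network configuration:", exc)
```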

Docker ships with six built-in network drivers:

- bridge: the default driver

- host: removes the network isolation between the host and the container

- none: completely isolates the container from the host and other containers

- overlay: connects multiple Docker daemons, e.g. for Swarm services; ideal for multi-host environments

- ipvlan: gives the user total control over IPv4 and IPv6 addressing

- macvlan: assigns a MAC address to each container so it appears as a physical device on the network; suited to legacy applications that expect a direct connection to the physical network

There are also third-party networking options, which we’ll cover at the end of this article.
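As a rough guide to how these drivers are chosen from the command line, the Python sketch below only builds the docker command lines. It does not run them. It assumes that bridge, host and none are used through the networks Docker pre-creates under those names, while overlay, ipvlan and macvlan need a network created first. The network name “mynet” and the interface “eth0” are hypothetical placeholders. Real macvlan/ipvlan setups usually also pass a subnet and gateway matching the physical network. Overlay networks require swarm mode, and they need “--attachable” for standalone containers to join.

```python
import shlex
from typing import List, Optional

# The six built-in drivers listed above.
BUILT_IN_DRIVERS = ("bridge", "host", "none", "overlay", "ipvlan", "macvlan")
# Docker pre-creates one network named after each of these drivers,
# so a container can join them by name without "docker network create".
PRE_CREATED = ("bridge", "host", "none")


def network_commands(driver: str, image: str, network: str = "mynet",
                     parent: Optional[str] = None) -> List[str]:
    """Build (not run) the docker CLI commands that attach `image` to `driver`.

    `network` and `parent` are illustrative names, not Docker defaults;
    they are ignored for the pre-created bridge/host/none networks.
    """
    if driver not in BUILT_IN_DRIVERS:
        raise ValueError(f"not a built-in driver: {driver}")
    if driver in PRE_CREATED:
        return [shlex.join(["docker", "run", "--network", driver, image])]
    create = ["docker", "network", "create", "--driver", driver]
    if driver == "overlay":
        # Needs swarm mode; --attachable lets standalone containers join.
        create.append("--attachable")
    if parent is not None:
        # macvlan/ipvlan normally bind to a host interface such as eth0.
        create += ["-o", f"parent={parent}"]
    create.append(network)
    run = ["docker", "run", "--network", network, image]
    return [shlex.join(create), shlex.join(run)]


if __name__ == "__main__":
    for cmd in network_commands("macvlan", "nginx", parent="eth0"):
        print(cmd)
```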

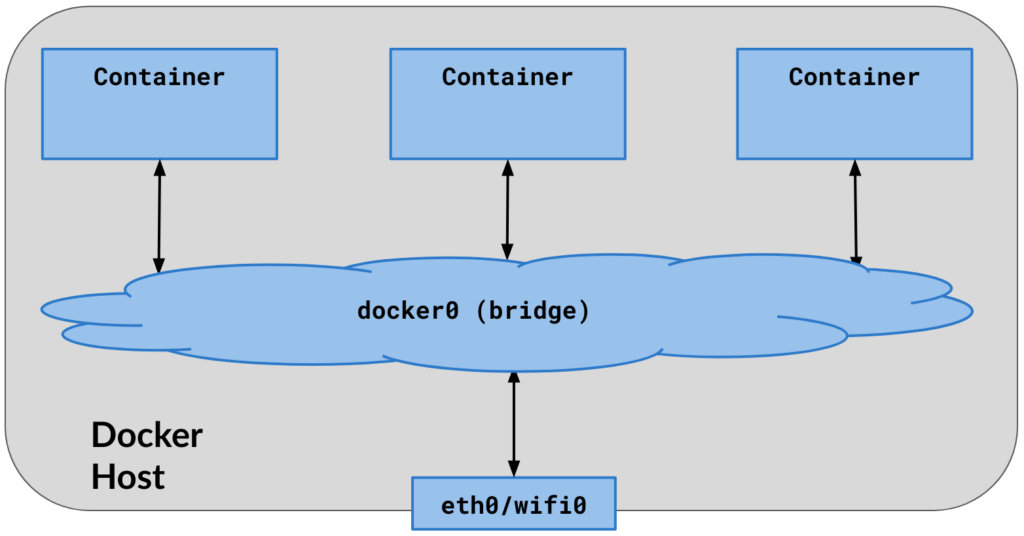

The first option, bridge, is the default networking mode:

--network="bridge"

1. By default, all containers are connected to the default bridge network unless they are explicitly configured to join another network.

2. Containers communicate with both the Docker host and the external network through IP forwarding and Network Address Translation (NAT).

3. The Docker host can reach every container directly through that container’s IP address.

4. The (default) bridge network interface, known as docker0, is visible and accessible on the host computer.

5. Upon initialization, the Docker engine locates an available network subnet on the host (typically 172.17.0.0/16) and assigns the initial IP address from that subnet (typically 172.17.0.1) to the default bridge, docker0.

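6. The default bridge network does not provide service discovery: containers on it cannot resolve each other by name and must use IP addresses (or the legacy “--link” option). User-defined bridge networks, created with “docker network create”, resolve container names automatically through Docker’s embedded DNS server.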
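The addressing described in point 5 can be checked with Python’s standard ipaddress module. This sketch assumes the typical 172.17.0.0/16 subnet. It also assumes that addresses are handed out sequentially, gateway first and then containers, which is what you usually see on a fresh default bridge. On a real host, “docker network inspect bridge” shows the actual values. The function names are hypothetical.

```python
import ipaddress
from typing import List, Tuple


def container_capacity(subnet: str) -> int:
    """Usable addresses in `subnet`, minus the one reserved for the gateway."""
    net = ipaddress.ip_network(subnet)
    return net.num_addresses - 2 - 1  # minus network, broadcast and gateway


def bridge_addresses(subnet: str = "172.17.0.0/16",
                     containers: int = 3) -> Tuple[str, List[str]]:
    """Return (gateway, container IPs), assuming sequential allocation."""
    if containers > container_capacity(subnet):
        raise ValueError("subnet too small for that many containers")
    hosts = ipaddress.ip_network(subnet).hosts()  # excludes network/broadcast
    gateway = str(next(hosts))  # first usable address goes to the bridge (docker0)
    return gateway, [str(next(hosts)) for _ in range(containers)]


if __name__ == "__main__":
    print(bridge_addresses())  # ('172.17.0.1', ['172.17.0.2', '172.17.0.3', '172.17.0.4'])
    print(container_capacity("172.17.0.0/16"))  # 65533
```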

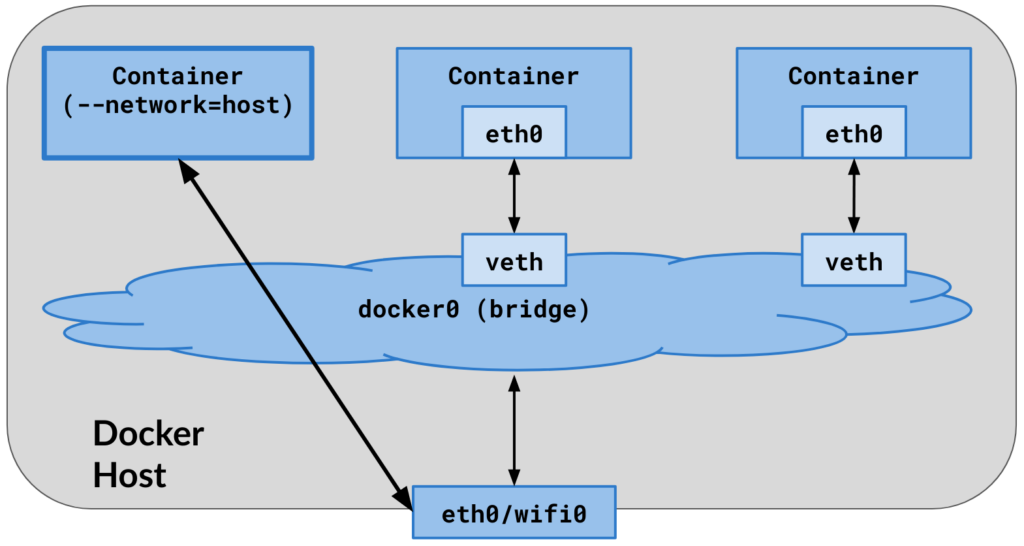

--network="host"

1. The container shares the host’s network namespace.

2. The network stack of the container is not isolated from that of the Docker host.

3. No virtual Ethernet pairs (veth pairs) are established on the host, making all host network interfaces visible within the container.

4. The container has visibility into all routing configurations of the host computer.

5. The container does not receive a dedicated IP address; instead, it shares one with the host.

6. Port mapping with the “-p” and “-P” options has no effect and is ignored, because the application’s ports inside the container are already bound directly on the host’s IP address.

7. This setup is advantageous for performance: there is no Network Address Translation (NAT) between the host and the container, and no “userland-proxy” is created for each container port.

8. It’s important to note that host networking mode is exclusive to Linux hosts.
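The difference between bridge and host mode for port exposure (point 6 above, and point 2 of the bridge list) can be summarized with a small Python model. It is an illustration only, not how Docker is implemented. The function name and arguments are hypothetical: `listening` is the set of ports the application binds inside the container, and `published` maps host ports to container ports, as with “-p 8080:80”.

```python
from typing import Dict, Set


def host_reachable_ports(mode: str, listening: Set[int],
                         published: Dict[int, int]) -> Set[int]:
    """Ports reachable on the host's IP address under a given network mode.

    Illustrative model only. `published` maps host port -> container port.
    """
    if mode == "host":
        # Shared network namespace: "-p" is ignored and every listening
        # port is already bound on the host's interfaces.
        return set(listening)
    if mode == "bridge":
        # Only published ports are forwarded (via NAT) to the container.
        return {h for h, c in published.items() if c in listening}
    if mode == "none":
        # No interfaces besides loopback; "-p" is ignored.
        return set()
    raise ValueError(f"unknown mode: {mode}")


if __name__ == "__main__":
    for mode in ("bridge", "host", "none"):
        print(mode, sorted(host_reachable_ports(mode, {80, 443}, {8080: 80})))
```

With an app listening on ports 80 and 443 and “-p 8080:80”, bridge mode exposes only 8080 on the host. Host mode exposes 80 and 443 directly, and none mode exposes nothing.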

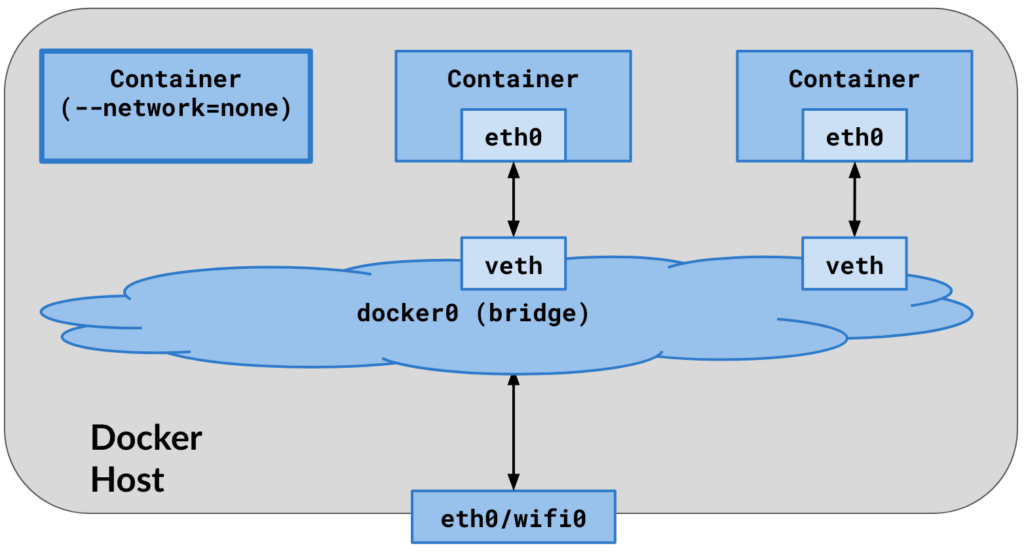

--network="none"

1. Container networking is disabled entirely, so there is no IP connectivity for either outgoing or incoming traffic.

2. Only the loopback interface (lo) remains available.

3. There is no establishment of virtual Ethernet pairs (veth pairs) on the host.

4. Port mapping with the “-p” and “-P” options has no effect and is ignored.

5. This configuration serves as a secure sandbox, providing a controlled and isolated environment for conducting tests and experiments.
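One quick way to confirm this isolation from inside a container is to ask the kernel whether it has a route to an outside address. The Python sketch below relies on the fact that connect() on a UDP socket sends no packets. It only performs a route lookup, so it fails immediately with “Network is unreachable” when loopback is the only interface. The function name is hypothetical, and 8.8.8.8 is just an arbitrary external address.

```python
import socket


def can_route_to(ip: str, port: int = 53) -> bool:
    """Return True if the kernel has a route to `ip` (no packets are sent)."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as s:
        try:
            s.connect((ip, port))  # UDP connect = route lookup only
            return True
        except OSError:  # e.g. ENETUNREACH when only loopback exists
            return False


if __name__ == "__main__":
    print("loopback reachable:", can_route_to("127.0.0.1"))
    print("external reachable:", can_route_to("8.8.8.8"))
```

Run inside a container started with --network="none", the loopback check should succeed while the external one should not.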

To complete the discussion, here is a list of third-party networking drivers:

- Weave: Creates a virtual network that connects Docker containers across multiple hosts or clouds. It requires no special setup or hardware. Weave networks are partition-tolerant and resilient, which makes them well suited to unpredictable environments.

- Calico: A networking and network-policy provider that connects containers, virtual machines, and bare-metal servers. It is simple, scalable, and effective at enforcing network policy, which makes it a popular choice for Kubernetes networking.

- Flannel: A simple network fabric for Kubernetes that connects containers on different hosts. Flannel assigns each host a subnet, and the containers on that host get their IP addresses from it.

- Cilium: A plugin for Linux containers that adds API-aware network security filtering to Docker and Kubernetes. It enforces network-layer and application-layer security policies based on container or pod identity, in a way that is both simple and powerful.

- Contiv: Built by Cisco, Contiv offers flexible networking (native L3 using BGP, overlay using VXLAN, standard L2, or Cisco SDN/ACI) to support a variety of use cases.